As multi-core processing continues to become more relevant, having a good understanding of the primitives used to achieve fast and correct concurrent (and ideally parallel) processing is very important.

Personally, I’m pretty confident with Mutexes and channels, especially in Rust where the data protected by a Mutex is actually, you know, protected by it. There is however another set of primitives: Atomics. This primitives has enticed me ever since I watched Herb Sutters excellent talk: Lock-Free Programming (or, Juggling Razor Blades).

The Promise of Atomics

Atomics sound great, they allow you to safely mutate data between threads without having to do any locking, so no blocking on OS calls or whatnot.

The basic idea is that any instruction on an atomic type happens in a single transaction. So other threads will always either see the full result of a transaction, or the state before the transaction, never an in-between value.

Available operations

Usually, the operations supported on these Atomic types are:

- load – Loads the value of the given atomic, “atomic” in the sense that only a real stored value is read, never an in-between value caused by a computation or partial update.

- store – Stores the given value, “atomic” in the sense that other threads will either see the full written value, or the old one.

- fetch_add,_sub,_and,_or_max,_min, etc. (for int types) – Add, subtract, etc. operations. “Atomic” in the sense that only the full result of the operation is stored, and no updates can be missed. I.e. if 10 threads all increment an atomic with value 0, the result will definitely be 10. Usually these operations return the value the atomic previously held.

- compare_exchange – One of the more advanced instructions, checks that the atomic is of a given value, and only if this is the case, stores a new value into it. This is “atomic” in the sense that there may not be an update to the value in-between checking that it is of the right value and storing something in it.

These operations are only individually atomic. A sequence of them is not in itself atomic. So trying to replace a compare_exchange call with a manual check will not be an atomic operation!

In the example below, The result may be:

- Thread 1 is first!

- Thread 2 is first!

- Thread 1 is first!

Thread 2 is first! - Thread 2 is first!

Thread 1 is first!

// Thread 1

if (my_atomic.load(Ordering::Relaxed) == 0) {

// Thread 2 may store "2" into the atomic now

my_atomic.store(1, Ordering::Relaxed);

println!("Thread 1 is first!");

}

// Thread2

if (my_atomic.load(Ordering::Relaxed == 0) {

// Thread 1 may store "1" into the atomic now

my_atomic.store(2, Ordering::Relaxed);

println!("Thread 2 is first!");

}So there may still be operations of other threads in-between the atomic operations of one thread! There can easily be race-conditions with atomics!

The correct Rust implementation would look like this:

// Thread 1

if atomic

.compare_exchange(0, 1, Ordering::Relaxed, Ordering::Relaxed)

.is_ok()

{

println!("Thread 1 is first!");

}

// Thread 2

if atomic

.compare_exchange(0, 2, Ordering::Relaxed, Ordering::Relaxed)

.is_ok()

{

println!("Thread 2 is first!");

}Memory Order

You may have noticed that the previous examples have all used this extra Ordering::Relaxed argument. These Orderings are part of the C++20 memory model for atomics, which Rust has simply copied with a few minor tweaks.

But what do these orderings actually do? Wasn’t the point of Atomics, that they provide atomic operations by themselves, so what is this extra argument for? This is the part that I’m still trying to wrap my head around. I’ll try to explain my current understanding of these. This understanding may not be correct! You have been warned! But I will keep updating this post to reflect my current understanding and to keep this as a reference.

So far as I can tell, the memory Ordering is actually pretty much irrelevant, as long as we’re talking about synchronizing through a single atomic variable. As mentioned earlier, the atomic operations are all indeed atomic, no matter what ordering is used. And they will eventually be seen by all other threads. So as long as no other shared memory depends on the value of an atomic, Ordering::Relaxed is fine to use!

What does this mean in practice? In our earlier examples, the threads communicated entirely through the given “atomic” variable. They only exchanged the value inside the atomic, nothing else.

Therefore using Ordering::Relaxed is fine to use.

However, what happens if we also change another value (atomic or not), maybe in a producer-consumer fashion?

let value = AtomicI32::new(0);

let has_value = AtomicBool::new(false);

// Thread 1 (Producer)

value.store(5, Ordering::Relaxed);

has_value.store(true, Ordering::Relaxed);

// Thread 2 (Consumer)

while !has_value.load(Ordering::Relaxed) { std::hint::spin_loop(); }

let value = value.load(Ordering::Relaxed);

println!("The value is {value}");From what I’ve gathered, this code seems correct at first, but actually isn’t! It’s entirely possible that the consumer thread may read the value as 0. But how can this be? We’re assigning “value” to 5 before we assign “has_value”. Right? Right?!

Unfortunately, the hardware may actually not do as requested. Modern CPUs can arbitrarily reorder instructions to achieve a better utilization of their individual cores. As the stores to “value” and “has_value” don’t have a data-dependency between them (i.e. the store to “has_value” doesn’t need to know what’s inside “value” to succeed), they may be reordered by the CPU to execute the store to “has_value” first.

These loads and stores will still be atomic, so the consumer thread will still always read either “0” or “5” and never “1” or another undefined value. But the point is that even with atomics, operations that happen to two different memory locations (atomic or not) are not synchronized.

Note that this is only the case on weakly-ordered Hardware (i.e. ARM). x86/x64 e.g. is strongly-ordered, so it provides AcquireRelease semantics automatically for each load & store operation. Which makes the problem even worse, as this will work correctly on one machine, but results in a data race on another!

I’ve also only been able to prove a data-race on ARM when going through a pointer indirection (i.e. “value” is an AtomicPtr to an int). I still believe the example above is technically allowed to produce a data race, even though it doesn’t on my machine.

Solving these problems is what these memory ordering arguments are for. They’re for specifying what should happen to other memory accesses before/after an access to the atomic.

There currently exist 5 of these orderings in Rust:

- Relaxed

- Acquire

- Release

- Acquire Release (AcqRel)

- Sequentially Consistent (SeqCst)

There is an additional one in C++, which I won’t cover.

Sequentially Consistent

This is the most easy-to-use and strongest ordering. It basically means “do not reorder anything around this instruction”. Anything that happens after this instruction will happen after it and anything that happens before it will happen before it.

If in doubt, this is the go-to ordering, as it’s the strongest. If you use this for all your atomic operations, your program should execute in the intended and intuitive order.

Acquire & Release

These orderings are for “acquiring” data from another thread and “releasing” data to another thread respectively.

So if we update an atomic that signifies another thread to read data we modified, we’ll want to use “Release”. In the previous example, this would be the “has_value” variable.

More formally, a store with “Release” ordering guarantees that any memory access that happened before it (in the example the store to “value”) does indeed happen before, even for non-atomic variables or atomics that used “Relaxed” ordering. However, any access that happens after the “Release” operation may actually happen before it.

Especially this last part has been counter-intuitive for me. How is it okay to move another operation before this store? Imagine this example code:

value.store(5, Ordering::Relaxed);

has_value.store(true, Ordering::Release);

value.store(10, Ordering::Relaxed);Here the store to set “value” to 10 may actually happen before the store to has_value. But this doesn’t change the behavior of another thread observing the “has_value” variable. After “has_value” is true, the value can be either 5 or 10, the other thread can always read either value. As previously stated, there are no atomic guarantees between atomic operations. So from another threads perspective, no matter what we do, the “value” will be set to 10 at “some point” and there’s no way for us to know when exactly by looking at “has_value”. Whenever we check “has_value” the “value” may have already changed to 10. Basically any access to “value” before checking “has_value” may be 0,5, or 10. The only thing we know after checking that “has_value” is true is that it’s 5, or 10 but never 0.

value.store(5, Ordering::Relaxed);

value.store(10, Ordering::Relaxed);

has_value.store(true, Ordering::Release);But even if we check has_value, that doesn’t mean that our access to “value” will actually happen “after” that check. The CPU may still freely reorder these two calls to load “value” first. That is where “Acquire” comes in. Acquire is used to make sure that any operation done after this one actually stays after it. Instructions that happened before may still be reordered to happen after, using the same logic as described for Release.

Acquire also only makes sense when paired with a previous store with “Release”. As only then do we know that certain operations have definitely happened before we read “has_value” variable and that we’re definitely reading the results after this has happened.

The thing to keep in mind with all of this is that this affects the entire memory of the CPU, not just the atomic, so that’s where the real use-case is. To make sure “other memory” is synchronized correctly, not the atomic itself.

This blog post also does a great job of explaining how this happens on a CPU level: https://fy.blackhats.net.au/blog/2019-07-16-cpu-atomics-and-orderings-explained/.

So if we were to rewrite the previous example correctly, we would have to use “Release” when setting the “has_value” variable and “Acquire” when reading from it.

let value = AtomicI32::new(0);

let has_value = AtomicBool::new(false);

// Thread 1 (Producer)

value.store(5, Ordering::Relaxed);

has_value.store(true, Ordering::Release);

// Thread 2 (Consumer)

while !has_value.load(Ordering::Acquire) { std::hint::spin_loop(); }

let value = value.load(Ordering::Relaxed);

println!("The value is {value}");And there we have it, a consumer-producer system that correctly uses atomics.

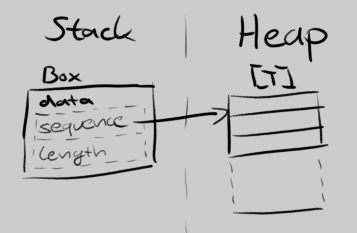

![Possible memory layout for a Box<[T]>, interpretes a Box as a container storing a single pointer onto the heap. It points to a memory cell on the heap, containing both the pointer to the sequence and it's length.](https://codecrash.me/wp-content/uploads/2020/07/Possible-Box-Layout.png)